Convex Optimization and the Linear Regression Problem

# Gradient Descent & Convex Optimization

2021-08-02

Tags: #MachineLearning #ConvexOptimization #Math

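Here (and in the quotation below), the loss function of our particular linear regression problem is guaranteed to be convex. In other settings, is the linear regression loss still convex? Can a linear regression problem get stuck in a local optimum?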

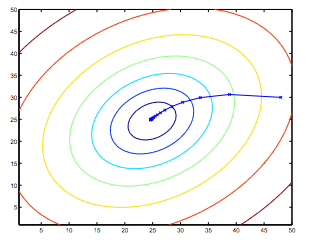

Note that, while gradient descent can be susceptible to local minima in general, the optimization problem we have posed here for linear regression has only one global, and no other local, optima; thus gradient descent always converges (assuming the learning rate α is not too large) to the global minimum. Indeed, J is a convex quadratic function. Here is an example of gradient descent as it is run to minimize a quadratic function.
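A short derivation may help with the question above. It assumes the least-squares cost used in the quoted notes, written in matrix form with a design matrix $X$ and target vector $y$ (this notation is an assumption, not taken from the quote):

$$
J(\theta) = \frac{1}{2}\lVert X\theta - y\rVert^2, \qquad
\nabla J(\theta) = X^\top (X\theta - y), \qquad
\nabla^2 J(\theta) = X^\top X
$$

$$
v^\top X^\top X\, v = \lVert Xv\rVert^2 \ge 0 \quad \text{for every } v
$$

The Hessian $X^\top X$ is positive semidefinite whatever the data are, so under this cost $J$ is always convex and every local minimum is a global one. The minimizer is unique when $X$ has full column rank; otherwise there is a whole set of equally good global minima, but still no worse local minimum to get stuck in.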

The ellipses shown above are the contours of a quadratic function.
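The convergence claim in the quote can be checked with a minimal sketch. It assumes the cost $J(\theta) = \frac{1}{2}\sum_i (\theta_0 + \theta_1 x_i - y_i)^2$ for a single feature; the toy data, the learning rate `alpha`, the step count, and the function names are all made up for illustration. Gradient descent is started from three very different points and compared against the closed-form least-squares line.

```python
def gradient_descent(xs, ys, theta0, theta1, alpha=0.02, steps=5000):
    """Batch gradient descent on J = 1/2 * sum((theta0 + theta1*x - y)^2)."""
    for _ in range(steps):
        # Residuals h(x) - y under the current parameters.
        residuals = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        # Partial derivatives of J with respect to theta0 and theta1.
        grad0 = sum(residuals)
        grad1 = sum(r * x for r, x in zip(residuals, xs))
        theta0 -= alpha * grad0
        theta1 -= alpha * grad1
    return theta0, theta1


def closed_form(xs, ys):
    """Least-squares line (intercept, slope), used as the reference minimum."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical toy data, not taken from the notes.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.1, 8.8]

# Three deliberately different starting points.
starts = [(0.0, 0.0), (10.0, -10.0), (-50.0, 30.0)]
results = [gradient_descent(xs, ys, t0, t1) for t0, t1 in starts]
reference = closed_form(xs, ys)

for start, found in zip(starts, results):
    print(start, "->", found, "reference:", reference)
```

With this data every start should end at the same parameters as the closed-form solution. Raising `alpha` too far makes the iterates diverge instead, which is the "learning rate not too large" caveat in the quote.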

- Convex optimization is closely tied to machine learning and deserves further study.