矩阵的求导

# 对矩阵的求导_Matrix_Derivative

Tags: #Matrix #Derivative #Calculus #MachineLearning

- 在学习吴恩达机器学习CS229的时候为了推导Normal Equation的公式, 接触到了函数对于矩阵的求导, 因为许久没有接触微积分, 并且知识跨度太大, 许久没有看懂, 故在此笔记中慢慢梳理.

- Learning Materials:

- Pili HU, Matrix Calculus, https://github.com/hupili/tutorial/tree/master/matrix-calculus

- A Matrix Algebra Approach to Artificial Intelligence by Xian-Da Zhang

- 矩阵求导术

- 矩阵导数计算器

# 不同的情况

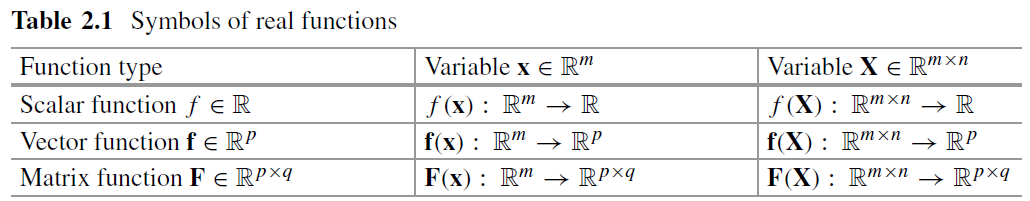

涉及到矩阵的导数运算有以下情况:

- 标量函数(Scalar Function) : 将变量(可能是矩阵或者向量)映射到 $\rightarrow\mathbb{R}_{}$

- 向量函数(Vector Function): 变量$\rightarrow\mathbb{R}_{n}$

- 矩阵函数(Matrix Function): 变量$\rightarrow\mathbb{R}_{m\times n}$

具体的映射表示如下:1

# A Walk Through

# Highlights

矩阵导数与微分的联系 $$d f=\operatorname{tr}\left(\left(\frac{\partial f}{\partial X}\right)^{T} d X\right)$$ ^e0894d

# 与梯度的关系2

从下面的叙述可以看出, 一个$\mathbb R_{m\times n}\mapsto \mathbb R_{n}$函数$f(A)$, 其对于$A$梯度便是对于自变量$A$的导数矩阵.

所以在吴恩达的讲义里面的$\nabla$符号是梯度, 但是严格的来说应该是导数$\large \frac {\partial f} {\partial x}$ Normal_Equation_Proof_2_Matrix_Method

# 实值函数相对于向量和矩阵的梯度

相对于 $\mathrm{n} \times 1$ 向量 $\mathrm{x}$ 的梯度算子记作 $\nabla_{\boldsymbol{x}}$, 定义为 $$ \nabla_{\boldsymbol{x}} \stackrel{\text { def }}{=}\left[\frac{\partial}{\partial x_{1}}, \frac{\partial}{\partial x_{2}}, \cdots, \frac{\partial}{\partial x_{n}}\right]^{T}=\frac{\partial}{\partial \boldsymbol{x}} $$

# 对向量的梯度

以 $\mathrm{n} \times 1$ 实向量 $\mathrm{x}$ 为变元的实标量函数 $\mathrm{f}(\mathrm{x})$ 相对于 $\mathrm{x}$ 的梯度为 $\mathrm{n} \times 1$ 列向量 $\mathbf{x}$, 定义为 $$ \nabla_{\boldsymbol{x}} f(\boldsymbol{x}) \stackrel{\text { def }}{=}\left[\frac{\partial f(\boldsymbol{x})}{\partial x_{1}}, \frac{\partial f(\boldsymbol{x})}{\partial x_{2}}, \cdots, \frac{\partial f(\boldsymbol{x})}{\partial x_{n}}\right]^{T}=\frac{\partial f(\boldsymbol{x})}{\partial \boldsymbol{x}} $$ m维行向量函数 $\boldsymbol{f}(\boldsymbol{x})=\left[f_{1}(\boldsymbol{x}), f_{2}(\boldsymbol{x}), \cdots, f_{m}(\boldsymbol{x})\right]$ 相对于n维实向量 $\mathbf{x}$ 的梯度为 $\mathrm{n} \times \mathrm{m}$ 矩阵, 定义为 $$ \nabla_{\boldsymbol{x}} \boldsymbol{f}(\boldsymbol{x}) \stackrel{\operatorname{def}}{=}\left[\begin{array}{cccc} \frac{\partial f_{1}(\boldsymbol{x})}{\partial x_{1}} & \frac{\partial f_{2}(\boldsymbol{x})}{\partial x_{1}} & \cdots & \frac{\partial f_{m}(\boldsymbol{x})}{\partial x_{1}} \\ \frac{\partial f_{1}(\boldsymbol{x})}{\partial x_{2}} & \frac{\partial f_{2}(\boldsymbol{x})}{\partial x_{2}} & \cdots & \frac{\partial f_{m}(\boldsymbol{x})}{\partial x_{2}} \\ \vdots & \vdots & \ddots & \vdots \\ \frac{\partial f_{1}(\boldsymbol{x})}{\partial x_{n}} & \frac{\partial f_{2}(\boldsymbol{x})}{\partial x_{n}} & \cdots & \frac{\partial f_{m}(\boldsymbol{x})}{\partial x_{n}} \end{array}\right]=\frac{\partial \boldsymbol{f}(\boldsymbol{x})}{\partial \boldsymbol{x}} $$

# 对矩阵的梯度

标量函数 $f(\boldsymbol{A})$ 相对于 $\mathrm{m} \times \mathrm{n}$ 实矩阵 $\mathrm{A}$ 的梯度为 $\mathrm{m} \times \mathrm{n}$ 矩阵, 简称梯度矩阵, 定义为 $$ \nabla_{A} f(\boldsymbol{A})\stackrel{\text { def }}{=}\left[\begin{array}{cccc} \frac{\partial f(A)}{\partial a_{11}} & \frac{\partial f(A)}{\partial a_{12}} & \cdots & \frac{\partial f(A)}{\partial a_{1 n}} \\ \frac{\partial f(A)}{\partial a_{21}} & \frac{\partial f(A)}{\partial a_{22}} & \cdots & \frac{\partial f(A)}{\partial a_{2 n}} \\ \vdots & \vdots & \ddots & \vdots \\ \frac{\partial f(A)}{\partial a_{m 1}} & \frac{\partial f(A)}{\partial a_{m 2}} & \cdots & \frac{\partial f(A)}{\partial a_{m n}} \end{array}\right]=\frac{\partial f(\boldsymbol{A})}{\partial \boldsymbol{A}} $$

# 法则

以下法则适用于实标量函数对向量的梯度以及对矩阵的梯度.

- 线性法则: 若 $f(\boldsymbol{A})$ 和 $g(\boldsymbol{A})$ 分别是矩阵A的实标量函数, $\mathrm{c}{1}$ 和 $\mathrm{c}{2}$ 为实常数, 则

$$ \frac{\partial\left[c_{1} f(\boldsymbol{A})+c_{2} g(\boldsymbol{A})\right]}{\partial \boldsymbol{A}}=c_{1} \frac{\partial f(\boldsymbol{A})}{\partial \boldsymbol{A}}+c_{2} \frac{\partial g(\boldsymbol{A})}{\partial \boldsymbol{A}} $$

- 乘积法则: 若 $f(\boldsymbol{A}), g(\boldsymbol{A})$ 和 $h(\boldsymbol{A})$ 分别是矩阵A的实标量函数, 则

$$ \begin{aligned} &\frac{\partial f(\boldsymbol{A}) g(\boldsymbol{A})}{\partial \boldsymbol{A}}=g(\boldsymbol{A}) \frac{\partial f(\boldsymbol{A})}{\partial \boldsymbol{A}}+f(\boldsymbol{A}) \frac{\partial g(\boldsymbol{A})}{\partial \boldsymbol{A}} \\ &\frac{\partial f(\boldsymbol{A}) g(\boldsymbol{A}) h(\boldsymbol{A})}{\partial \boldsymbol{A}}=g(\boldsymbol{A}) h(\boldsymbol{A}) \frac{\partial f(\boldsymbol{A})}{\partial \boldsymbol{A}}+f(\boldsymbol{A}) h(\boldsymbol{A}) \frac{\partial g(\boldsymbol{A})}{\partial \boldsymbol{A}}+f(\boldsymbol{A}) g(\boldsymbol{A}) \frac{\partial h(\boldsymbol{A})}{\partial \boldsymbol{A}} \end{aligned} $$

- 商法则: 若 $g(\boldsymbol{A}) \neq 0$, 则

$$ \frac{\partial f(\boldsymbol{A}) / g(\boldsymbol{A})}{\partial \boldsymbol{A}}=\frac{1}{g(\boldsymbol{A})^{2}}\left[g(\boldsymbol{A}) \frac{\partial f(\boldsymbol{A})}{\partial \boldsymbol{A}}-f(\boldsymbol{A}) \frac{\partial g(\boldsymbol{A})}{\partial \boldsymbol{A}}\right] $$

- 链式法则:若A为 $m \times n$ 矩阵,且 $y=f(\boldsymbol{A})$ 和 $g(y)$ 分别是以矩阵 $\mathbf{A}$ 和标量 $y$ 为变元的实标量函数, 则

$$ \frac{\partial g(f(\boldsymbol{A}))}{\partial \boldsymbol{A}}=\frac{d g(y)}{d y} \frac{\partial f(\boldsymbol{A})}{\partial \boldsymbol{A}} $$

A Matrix Algebra Approach to Artificial Intelligence ↩︎