D2L-54-Gradient Clipping

# Gradient Clipping

2022-04-02

Tags: #GradientClipping

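- Gradient clipping is one way to guard against exploding gradients: it puts an upper bound $\theta$ directly on the norm of the gradient.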

$$\mathbf{g} \leftarrow \min \left(1, \frac{\theta}{\|\mathbf{g}\|}\right) \mathbf{g}$$

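- The form above is a bit roundabout. To keep the direction of $\mathbf{g}$ unchanged, clipping rescales every component of $\mathbf{g}$ by the same factor. In effect, the clipped gradient has norm $\min(\|\mathbf{g}\|, \theta)$ and direction $\mathbf{g}/\|\mathbf{g}\|$: $$\mathbf{g} \leftarrow \min \left(\|\mathbf{g}\|, \theta\right) \frac{\mathbf{g}}{\|\mathbf{g}\|}$$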
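As a quick check that the two forms agree, here is a minimal pure-Python sketch that treats the gradient as a plain list of floats (an assumption for readability; real frameworks operate on tensors). The helper names `l2_norm`, `clip_by_factor` and `clip_by_norm` are hypothetical.

```python
import math

def l2_norm(g):
    """Euclidean (L2) norm of a gradient given as a list of floats."""
    return math.sqrt(sum(x * x for x in g))

def clip_by_factor(g, theta):
    """First form: g <- min(1, theta / ||g||) * g."""
    norm = l2_norm(g)
    if norm == 0.0:
        return list(g)  # a zero gradient has nothing to clip
    scale = min(1.0, theta / norm)
    return [scale * x for x in g]

def clip_by_norm(g, theta):
    """Second form: new norm is min(||g||, theta), direction g / ||g|| is kept."""
    norm = l2_norm(g)
    if norm == 0.0:
        return list(g)
    unit = [x / norm for x in g]  # unit vector in the direction of g
    return [min(norm, theta) * u for u in unit]

g = [3.0, 4.0]  # ||g|| = 5
print(clip_by_factor(g, 1.0))
print(clip_by_norm(g, 1.0))
print(clip_by_factor(g, 10.0))
```

For $\mathbf{g} = (3, 4)$ with $\|\mathbf{g}\| = 5$ and $\theta = 1$, both forms should give approximately $(0.6, 0.8)$: the same direction, with norm 1. With $\theta = 10$ the gradient is already within the bound and comes back unchanged.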

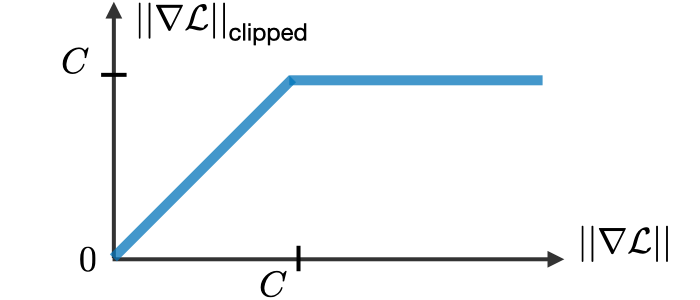

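Compared with simply lowering the learning rate, clipping acts piecewise: it only constrains the gradient when its norm exceeds $\theta$ and leaves smaller gradients untouched.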
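To make the piecewise behaviour concrete, the sketch below compares one SGD step under clipping with one step under a learning rate cut by a hypothetical factor of 50 (chosen so that both tame the large gradient equally). Gradients are plain Python lists, and the helper names are illustrative only.

```python
import math

def norm(g):
    """L2 norm of a gradient stored as a list of floats."""
    return math.sqrt(sum(x * x for x in g))

def clip(g, theta):
    """Rescale g to norm theta only if its norm exceeds theta."""
    n = norm(g)
    return [x * theta / n for x in g] if n > theta else list(g)

def lr_step(g, lr):
    """SGD update -lr * g; a smaller lr shrinks every step, large or small."""
    return [-lr * x for x in g]

theta, lr = 1.0, 0.1
small = [0.3, 0.4]    # ||g|| = 0.5, below theta
large = [30.0, 40.0]  # ||g|| = 50, above theta

for name, g in (("small", small), ("large", large)):
    step_clip = lr_step(clip(g, theta), lr)
    step_low_lr = lr_step(g, lr / 50)  # hypothetical: lr cut 50x to tame the large gradient
    print(name, norm(step_clip), norm(step_low_lr))
```

With these numbers the large gradient yields a step of norm about 0.1 in both cases, but the small gradient's step is about 0.05 under clipping versus about 0.001 with the reduced learning rate. Lowering the learning rate slows down every update, while clipping only intervenes on the large one.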

# PyTorch

Reference: `torch.nn.utils.clip_grad_norm_` (PyTorch 1.11.0 documentation)
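In a training loop, `torch.nn.utils.clip_grad_norm_` is typically called between `loss.backward()` and `optimizer.step()`. It treats the gradients of all given parameters as one concatenated vector, rescales them in place so that their total norm is at most `max_norm` (PyTorch adds a small epsilon, so the result lands just under `max_norm`), and returns the total norm measured before clipping. The toy model, data and loss scale below are assumptions made only to produce large gradients.

```python
import torch
from torch import nn

torch.manual_seed(0)
model = nn.Linear(4, 2)  # toy model, assumed for illustration
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

x = torch.randn(8, 4)
loss = 100.0 * (model(x) ** 2).sum()  # deliberately large loss -> large gradients

optimizer.zero_grad()
loss.backward()

theta = 1.0
# Rescales every .grad in place so the combined L2 norm is at most theta;
# the return value is the total norm *before* clipping.
total_norm = torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=theta)

# Recompute the global norm to confirm the gradients were clipped.
clipped_norm = torch.sqrt(sum((p.grad ** 2).sum() for p in model.parameters()))
print(f"before: {total_norm.item():.3f}, after: {clipped_norm.item():.3f}")

optimizer.step()  # the update uses the clipped gradients
```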


# Simple implementation in D2L
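The from-scratch approach in D2L computes the global L2 norm over all parameter gradients by hand and rescales them when it exceeds $\theta$. The sketch below follows that idea; the function name `grad_clipping_sketch`, its signature and the toy model are hypothetical, not copied from the book.

```python
import torch
from torch import nn

def grad_clipping_sketch(params, theta):
    """Hypothetical helper: clip the global L2 norm of all gradients to theta.

    Returns the norm measured before clipping.
    """
    params = [p for p in params if p.grad is not None]
    # View all gradients as one long vector and compute its L2 norm.
    norm = torch.sqrt(sum(torch.sum(p.grad ** 2) for p in params))
    if norm > theta:
        for p in params:
            # One shared factor for every parameter keeps the overall direction.
            p.grad.mul_(theta / norm)
    return norm

# Usage on a toy model (assumed; the loss is scaled up to force large gradients).
torch.manual_seed(0)
net = nn.Linear(4, 2)
loss = 100.0 * (net(torch.randn(8, 4)) ** 2).sum()
loss.backward()

before = grad_clipping_sketch(net.parameters(), theta=1.0)
after = torch.sqrt(sum((p.grad ** 2).sum() for p in net.parameters()))
print(f"before: {before.item():.3f}, after: {after.item():.3f}")
```

Using a single scale factor across all parameters is what keeps the direction of the full gradient vector $\mathbf{g}$ unchanged, matching the formula at the top of this note.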
