D2L-57-LSTM-Long Short-Term Memory Networks

# Long Short-Term Memory

Tags: #LSTM #DeepLearning #RNN

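The LSTM was one of the earliest RNN variants designed to address the long-term dependency problem. It is more complex than the GRU, but the design idea is the same. Interestingly, the LSTM (1997) predates the GRU (2014) by nearly 20 years.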

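Like the GRU, the LSTM uses gates to control how much of the previous hidden state carries over into the next one. In other words, it selectively mixes "long-term memory" and "short-term memory", which is where its name comes from.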

Compared with the GRU (one hidden state, two gates), the LSTM has two states, the cell state $\mathbf{C}_{t}$ and the hidden state $\mathbf{H}_{t}$, and one more gate (three in total).

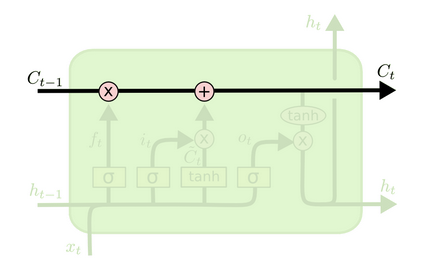

# Cell State

Compared with a vanilla RNN, the key to how the LSTM handles long-term dependencies is the newly added cell state:

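The cell state acts like a conveyor belt along which information flows smoothly. As it passes through each cell, the information undergoes only a few minor linear interactions (element-wise scaling by the forget gate and addition of new content), which gives the network its long-term memory.1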

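Note, however, that only the hidden state is passed to the output layer; the memory cell $\mathbf{C}_{t}$ does not directly participate in computing the output.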
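The conveyor-belt picture can be made concrete with a toy calculation. It assumes a single scalar cell value, a forget gate held at a constant value, and a closed input gate ($\mathbf{I}_t = 0$), none of which hold in a trained network. Under these assumptions the cell update $\mathbf{C}_t = \mathbf{F}_t \odot \mathbf{C}_{t-1} + \mathbf{I}_t \odot \tilde{\mathbf{C}}_t$ (given below) reduces to repeated scaling by the forget gate, so the initial value survives as long as the forget gate stays near 1. The function name `retained_fraction` is purely illustrative.

```python
def retained_fraction(forget_value: float, steps: int) -> float:
    """Fraction of an initial cell-state value left after `steps` updates,
    assuming a constant scalar forget gate and a closed input gate (I_t = 0)."""
    c = 1.0  # initial cell-state value C_0
    for _ in range(steps):
        # C_t = F_t * C_{t-1} + I_t * C~_t, and the second term vanishes when I_t = 0
        c = forget_value * c
    return c


for f in (1.0, 0.99, 0.5):
    print(f"forget={f}: {retained_fraction(f, steps=100):.3g}")
```

With a forget value of 0.99, roughly a third of the value ($0.99^{100} \approx 0.37$) survives 100 steps; with 0.5 it is effectively gone. A gate that can learn to stay close to 1 is what lets information ride the belt across long spans.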

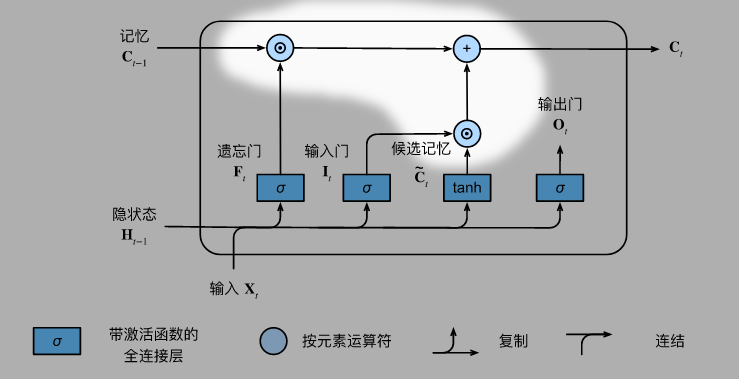

# 3 Gates & 1 Candidate State

# 3 Gates

- As in the GRU, the previous hidden state $\mathbf{H}_{t-1}$ and the input $\mathbf{X}_{t}$ are concatenated and fed together into the three gates (input gate $\mathbf{I}_{t}$, forget gate $\mathbf{F}_{t}$, output gate $\mathbf{O}_{t}$), each with a sigmoid activation:

$$\begin{aligned} \mathbf{I}_{t} &=\sigma\left(\mathbf{X}_{t} \mathbf{W}_{x i}+\mathbf{H}_{t-1} \mathbf{W}_{h i}+\mathbf{b}_{i}\right) \\ \mathbf{F}_{t} &=\sigma\left(\mathbf{X}_{t} \mathbf{W}_{x f}+\mathbf{H}_{t-1} \mathbf{W}_{h f}+\mathbf{b}_{f}\right) \\ \mathbf{O}_{t} &=\sigma\left(\mathbf{X}_{t} \mathbf{W}_{x o}+\mathbf{H}_{t-1} \mathbf{W}_{h o}+\mathbf{b}_{o}\right) \end{aligned}$$
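A minimal NumPy sketch of the three gate equations. It assumes row-major batches (`X` of shape `(batch, num_inputs)`, `H_prev` of shape `(batch, num_hiddens)`) and a hypothetical `params` dict keyed by the weight names in the formulas; the random initialization is only for illustration. The last lines check that using separate weights $\mathbf{W}_{x\cdot}$ and $\mathbf{W}_{h\cdot}$ is the same as multiplying the concatenation $[\mathbf{X}_t, \mathbf{H}_{t-1}]$ by one stacked weight matrix.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def lstm_gates(X, H_prev, params):
    """Input, forget and output gates; every entry lies in (0, 1)."""
    I = sigmoid(X @ params["W_xi"] + H_prev @ params["W_hi"] + params["b_i"])
    F = sigmoid(X @ params["W_xf"] + H_prev @ params["W_hf"] + params["b_f"])
    O = sigmoid(X @ params["W_xo"] + H_prev @ params["W_ho"] + params["b_o"])
    return I, F, O


rng = np.random.default_rng(0)
batch, num_inputs, num_hiddens = 2, 3, 4
params = {}
for g in "ifo":  # i = input gate, f = forget gate, o = output gate
    params[f"W_x{g}"] = rng.normal(scale=0.1, size=(num_inputs, num_hiddens))
    params[f"W_h{g}"] = rng.normal(scale=0.1, size=(num_hiddens, num_hiddens))
    params[f"b_{g}"] = np.zeros(num_hiddens)

X = rng.normal(size=(batch, num_inputs))
H_prev = rng.normal(scale=0.1, size=(batch, num_hiddens))
I, F, O = lstm_gates(X, H_prev, params)
print(I.shape)  # (2, 4)

# Separate weights == one matmul on the concatenation [X, H_prev]
stacked = np.vstack([params["W_xi"], params["W_hi"]])  # (num_inputs + num_hiddens, num_hiddens)
concat_ok = np.allclose(np.hstack([X, H_prev]) @ stacked,
                        X @ params["W_xi"] + H_prev @ params["W_hi"])
print(concat_ok)  # True
```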

# 1 Candidate State

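- Unlike the GRU, the candidate cell state takes the previous hidden state $\mathbf{H}_{t-1}$ and the input $\mathbf{X}_{t}$ directly as inputs, instead of first applying a "reset" (forgetting) operation to $\mathbf{H}_{t-1}$. Its activation is tanh: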

$$\tilde{\mathbf{C}}_{t}=\tanh \left(\mathbf{X}_{t} \mathbf{W}_{x c}+\mathbf{H}_{t-1} \mathbf{W}_{h c}+\mathbf{b}_{c}\right)$$
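The candidate computation as a small NumPy sketch, with the same shape conventions as assumed for the gates (batch rows, random illustrative weights). The point to notice is that `H_prev` enters the matrix product unchanged; a GRU would first multiply it element-wise by its reset gate.

```python
import numpy as np


def candidate_cell(X, H_prev, W_xc, W_hc, b_c):
    """C~_t = tanh(X_t W_xc + H_{t-1} W_hc + b_c).
    H_prev is used as-is: no reset gate is applied first (unlike the GRU)."""
    return np.tanh(X @ W_xc + H_prev @ W_hc + b_c)


rng = np.random.default_rng(1)
X = rng.normal(size=(2, 3))        # (batch, num_inputs)
H_prev = rng.normal(size=(2, 4))   # (batch, num_hiddens)
W_xc = rng.normal(size=(3, 4))
W_hc = rng.normal(size=(4, 4))
b_c = np.zeros(4)

C_tilde = candidate_cell(X, H_prev, W_xc, W_hc, b_c)
print(C_tilde.shape)  # (2, 4); every entry lies in [-1, 1] because of tanh
```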

# Update Cell State

Updating the previous cell state $\mathbf{C}_{t-1}$ takes two steps:


# Forget using Forget Gate

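$$\mathbf{C}_{t}=\textcolor{darkorchid}{\mathbf{F}_{t} \odot \mathbf{C}_{t-1}}+\mathbf{I}_{t} \odot \tilde{\mathbf{C}}_{t}$$

The previous cell state $\mathbf{C}_{t-1}$ is multiplied element-wise by the forget gate, masking out the elements that should be forgotten.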
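A tiny numeric illustration of the forget step. The gate values are hand-picked as exactly 0 or 1 (plus one 0.5) for readability; a real sigmoid gate only approaches 0 and 1.

```python
import numpy as np

C_prev = np.array([2.0, -1.5, 0.7, 3.0])  # previous cell state C_{t-1}
F = np.array([1.0, 0.0, 0.5, 0.0])        # idealized forget-gate values

# Element-wise (Hadamard) product: F = 1 keeps an entry, F = 0 erases it,
# values in between shrink it proportionally.
C_after_forget = F * C_prev
print(C_after_forget)
```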

# Merge new Candidate State

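$$\mathbf{C}_{t}=\mathbf{F}_{t} \odot \mathbf{C}_{t-1}\textcolor{orangered}{+\mathbf{I}_{t} \odot \tilde{\mathbf{C}}_{t}}$$

The candidate cell state is first multiplied element-wise by the input gate, which selects the positions to update, and the result is then added to the output of the forget step.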
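Continuing the hand-picked example (illustrative values only), the full two-step update looks like this. Note that in the standard LSTM the forget and input gates are independent, so nothing forces `F + I` to equal 1 per element; here it happens to, just to keep the numbers easy to follow.

```python
import numpy as np

C_prev = np.array([2.0, -1.5, 0.7, 3.0])   # previous cell state C_{t-1}
F = np.array([1.0, 0.0, 0.5, 0.0])         # forget gate (idealized)
I = np.array([0.0, 1.0, 0.5, 1.0])         # input gate (idealized)
C_tilde = np.array([0.9, -0.4, 0.2, 0.1])  # candidate cell state

# Step 1: forget; step 2: add the gated candidate
C_t = F * C_prev + I * C_tilde
print(C_t)
```

Entry 0 keeps its old value, entries 1 and 3 are overwritten by the candidate, and entry 2 is an even mix of both.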

# Output new Hidden State

In fact, $\mathbf{H}_{t}$ is simply a gated version of $\mathbf{C}_{t}$: tanh first squashes its values into the range $(-1,1)$, and the output gate then masks the result:

$$\mathbf{H}_{t}=\mathbf{O}_{t} \odot \tanh \left(\mathbf{C}_{t}\right)$$
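Putting all the equations together, here is a from-scratch forward pass in NumPy. This is a sketch in the spirit of D2L's from-scratch implementation, not its actual PyTorch code: `init_params`, `lstm_step` and `lstm_forward` are hypothetical helper names, and the small random initialization is an arbitrary choice. As noted earlier, only $\mathbf{H}_t$ is collected as output while $\mathbf{C}_t$ is carried along internally.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_params(num_inputs, num_hiddens, rng):
    """Small random weights and zero biases for the input (i), forget (f),
    output (o) gates and the candidate cell state (c)."""
    params = {}
    for g in "ifoc":
        params[f"W_x{g}"] = rng.normal(scale=0.1, size=(num_inputs, num_hiddens))
        params[f"W_h{g}"] = rng.normal(scale=0.1, size=(num_hiddens, num_hiddens))
        params[f"b_{g}"] = np.zeros(num_hiddens)
    return params


def lstm_step(X, H_prev, C_prev, p):
    I = sigmoid(X @ p["W_xi"] + H_prev @ p["W_hi"] + p["b_i"])         # input gate
    F = sigmoid(X @ p["W_xf"] + H_prev @ p["W_hf"] + p["b_f"])         # forget gate
    O = sigmoid(X @ p["W_xo"] + H_prev @ p["W_ho"] + p["b_o"])         # output gate
    C_tilde = np.tanh(X @ p["W_xc"] + H_prev @ p["W_hc"] + p["b_c"])   # candidate
    C = F * C_prev + I * C_tilde   # forget, then merge the gated candidate
    H = O * np.tanh(C)             # hidden state = gated, squashed cell state
    return H, C


def lstm_forward(inputs, H, C, p):
    """inputs: (num_steps, batch, num_inputs). Returns all hidden states
    stacked as (num_steps, batch, num_hiddens) plus the final (H, C)."""
    outputs = []
    for X in inputs:
        H, C = lstm_step(X, H, C, p)
        outputs.append(H)  # only H goes on to the output layer
    return np.stack(outputs), (H, C)


rng = np.random.default_rng(0)
num_steps, batch, num_inputs, num_hiddens = 5, 2, 3, 4
p = init_params(num_inputs, num_hiddens, rng)
inputs = rng.normal(size=(num_steps, batch, num_inputs))
H0 = np.zeros((batch, num_hiddens))
C0 = np.zeros((batch, num_hiddens))

outputs, (H_T, C_T) = lstm_forward(inputs, H0, C0, p)
print(outputs.shape)  # (5, 2, 4)
```

Because $\mathbf{O}_t \in (0,1)$ and $\tanh(\mathbf{C}_t) \in (-1,1)$, every hidden-state entry stays strictly inside $(-1,1)$, while the cell state itself is unbounded.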

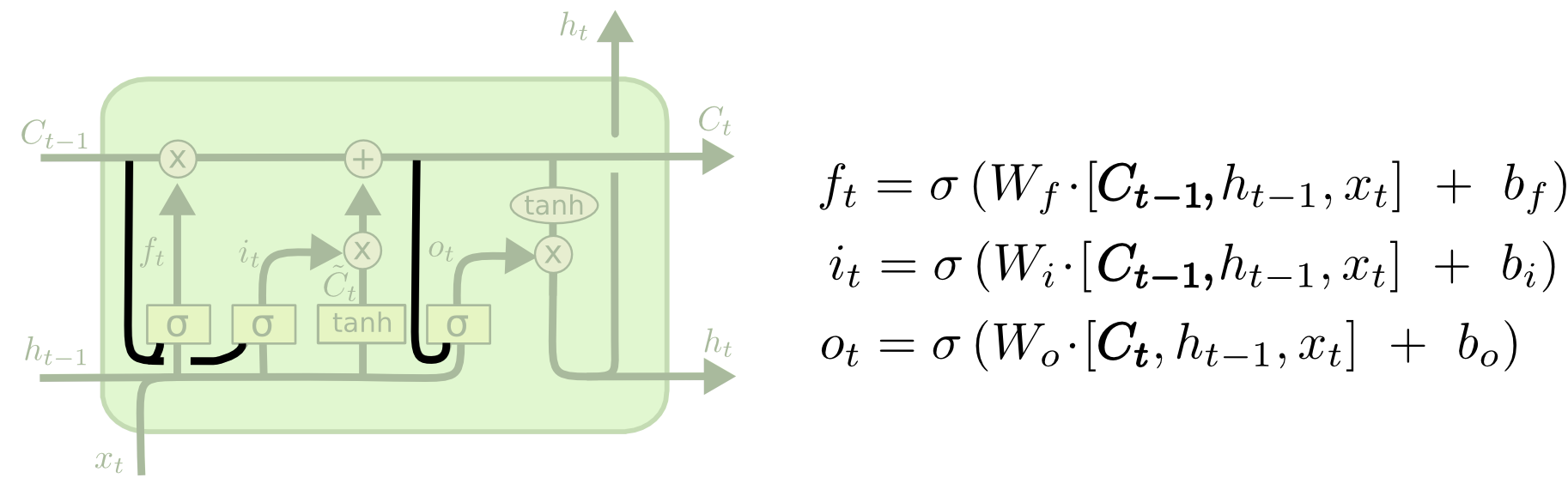

# Variants of LSTM

# Peepholes

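All gates can take a peek at the cell state: the forget and input gates look at the previous cell state $\mathbf{C}_{t-1}$, while the output gate looks at the updated cell state $\mathbf{C}_{t}$. Some variants add peepholes to only some of the gates.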
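A sketch of one peephole-LSTM step in NumPy. It assumes diagonal peephole weights, i.e. vectors `w_ci`, `w_cf`, `w_co` applied element-wise to the cell state, which is a common choice; a full peephole matrix is also possible. All names and the random initialization are illustrative.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def peephole_lstm_step(X, H_prev, C_prev, p):
    """One peephole-LSTM step (sketch, assuming diagonal peephole weights)."""
    # Input and forget gates peek at the previous cell state C_{t-1}
    I = sigmoid(X @ p["W_xi"] + H_prev @ p["W_hi"] + C_prev * p["w_ci"] + p["b_i"])
    F = sigmoid(X @ p["W_xf"] + H_prev @ p["W_hf"] + C_prev * p["w_cf"] + p["b_f"])
    C_tilde = np.tanh(X @ p["W_xc"] + H_prev @ p["W_hc"] + p["b_c"])
    C = F * C_prev + I * C_tilde
    # The output gate peeks at the updated cell state C_t
    O = sigmoid(X @ p["W_xo"] + H_prev @ p["W_ho"] + C * p["w_co"] + p["b_o"])
    H = O * np.tanh(C)
    return H, C


rng = np.random.default_rng(0)
num_inputs, num_hiddens = 3, 4
p = {}
for g in "ifoc":
    p[f"W_x{g}"] = rng.normal(scale=0.1, size=(num_inputs, num_hiddens))
    p[f"W_h{g}"] = rng.normal(scale=0.1, size=(num_hiddens, num_hiddens))
    p[f"b_{g}"] = np.zeros(num_hiddens)
for g in "ifo":
    p[f"w_c{g}"] = rng.normal(scale=0.1, size=num_hiddens)  # peephole weights

X = rng.normal(size=(2, num_inputs))
H_prev = np.zeros((2, num_hiddens))
C_prev = rng.normal(size=(2, num_hiddens))
H, C = peephole_lstm_step(X, H_prev, C_prev, p)
```

Setting the three peephole vectors to zero recovers the standard LSTM step.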

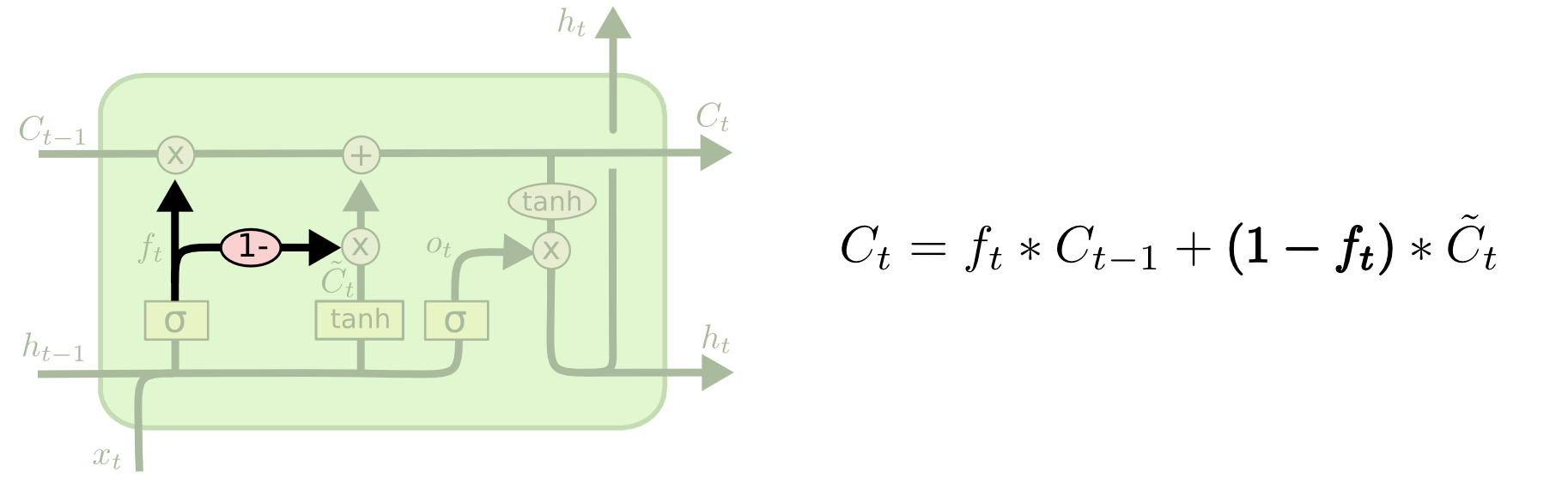

# Convex Combination2 (coupled forget and input gates)

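Forget to remember, remember to forget: the input gate is tied to the forget gate via $\mathbf{I}_{t} = 1 - \mathbf{F}_{t}$, so new information is written only where old information is forgotten, and old information is forgotten only where something new takes its place. Each element of $\mathbf{C}_{t}$ is then a convex combination of its old value and the candidate:

$$\mathbf{C}_{t}=\mathbf{F}_{t} \odot \mathbf{C}_{t-1}+\left(1-\mathbf{F}_{t}\right) \odot \tilde{\mathbf{C}}_{t}$$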
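A short NumPy sketch of the coupled update with hand-picked illustrative values. Because each weight pair $(f, 1-f)$ sums to 1 with $f \in [0,1]$, every element of the new cell state lands between its old value and the candidate value.

```python
import numpy as np


def coupled_cell_update(C_prev, F, C_tilde):
    """Coupled forget/input gates: I_t = 1 - F_t, so each element of C_t
    is a convex combination of C_{t-1} and the candidate C~_t."""
    return F * C_prev + (1.0 - F) * C_tilde


C_prev = np.array([2.0, -1.5, 0.7])   # previous cell state
C_tilde = np.array([0.5, 0.5, -0.5])  # candidate cell state
F = np.array([1.0, 0.0, 0.25])        # forget gate (illustrative values)

C_t = coupled_cell_update(C_prev, F, C_tilde)
print(C_t)
```

Entry 0 is fully kept, entry 1 fully replaced, and entry 2 is a 25/75 blend of old and new.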

# GRU

Understanding LSTM Networks, colah's blog. Many figures in this article are from colah's blog.